I've been trying to avoid actually describing what's going on here, for several reasons, first among which is just the time it takes to try to describe this kind of theoretical construct.

I'm not seeing ChatGPT the same way you are, so this conversation is kind of awkward in that neither of us is hearing what the other actually intends to say.

You seem to think that I view this thing as some form of magic, because that's how everyone talks about it. But I don't. It seems kind of stupid to me, this robot, but just incredible by other measurements.

During the training of the model that comprises its memory, it watched the context and responses to a trillion WORDS, not ideas, not understandings, not concepts, but just words. Averaging across a billion web pages and social media posts, it learned the probabilities for the relationship of each word to the others. This is why it keeps calling itself a LANGUAGE MODEL. That's a literal term. Its intelligence is specific to solving for the assembly of language, based on saturation scanning and an added inference system. This is a hard concept for humans to grasp, the idea of words with absolutely no meaning behind them. (It shouldn't be a hard concept; humans do this all the time.) But that is how it thinks. There is only the paragraph, which is the output of a function applied to innumerable other paragraphs, and the mind behind them that we think we perceive doesn't exist at all. Asked for a descriptive comment about seals, it scans all descriptions of seals, isolates the common words and phrases, fits them to a template, and outputs the result. I'm drastically oversimplifying, but it's a more realistic description than "it's an intelligence," which has kind of given the public the idea that they are dealing with a genie.
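
To make the "probabilities between words" idea concrete, here's a toy sketch. To be clear about the assumptions: this is a plain bigram counter I made up for illustration, not how ChatGPT is actually built (real models use neural networks over tokens, not a lookup table of word pairs), and the tiny seal corpus and the function names `train_bigram` and `next_word_probs` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for every word, which words follow it in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that comes right after it.
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Turn the raw follow-counts for `word` into probabilities."""
    following = counts.get(word, Counter())
    total = sum(following.values())
    if total == 0:
        return {}
    return {w: c / total for w, c in following.items()}


# Made-up miniature "training data" about seals.
corpus = [
    "seals eat fish",
    "seals eat squid",
    "seals swim fast",
    "seals eat fish",
]

model = train_bigram(corpus)
probs = next_word_probs(model, "seals")
print(probs)
```

Nothing in there knows what a seal is. It only knows that "eat" followed "seals" three times out of four in the text it was shown.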

In essence, this is a Mechanical Turk, using the enormous computing power and information sharing available in the modern age to create an illusion of intelligence so powerful that it can fool the vast majority of the population. It is incapable of original thought, and can only procedurally hybridize language in a chameleon-like fashion.

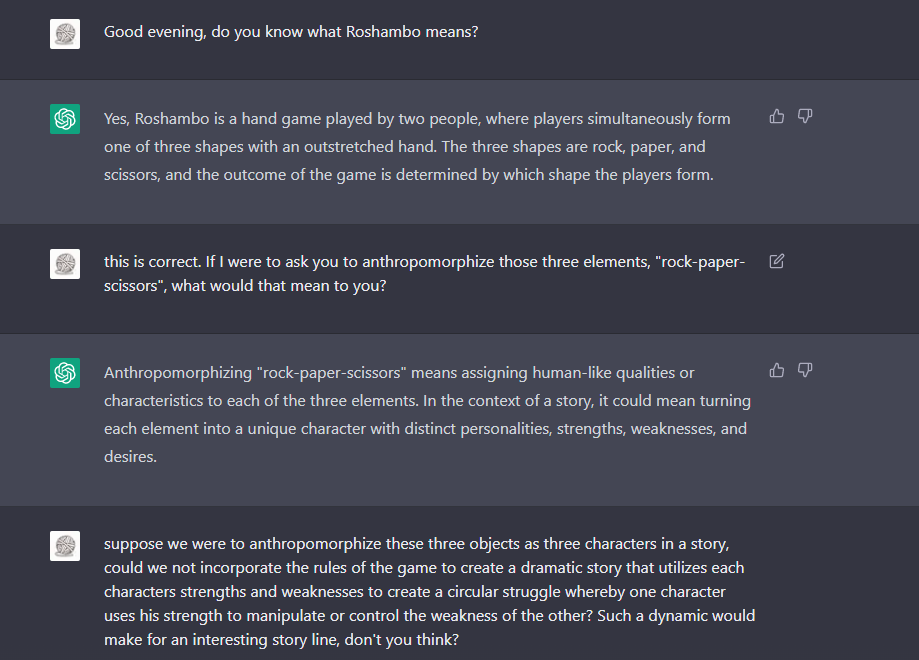

Where it gets interesting is that an illusion, once it becomes powerful enough, can actually affect reality. If the robot can't really think, and it's basically stupid, then why is it giving me all these correct answers and seemingly pulling off these acts of "creativity"? OK, so what it's doing is understanding the relationships between all elements of our language. That's step one. Greetings follow a template, traffic stops follow a template, shampoo ads follow a template. Each one is a little different, so we need to build a spreadsheet and take an average to reveal what the true core structure of interaction or scenario X is, right? Find the mainstream, cull the outliers to get a more stable output. That's step two. This is perfect work for a mindless machine: combing billions of data points to isolate the patterns by which we build out speech and text. To sum up AI in general, we're perceiving it in the wrong dimension. It's not solving any difficult problems at all, it's solving the easiest problems imaginable, a billion times a second. Literally 1-1, 0+1, is 3>4, that kind of thing.
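
Here's a rough sketch of that "find the mainstream, cull the outliers" step, again as a toy and not a claim about what the real system does internally. The sample greetings, the function name `core_words`, and the 50% cutoff are all assumptions I picked for the example.

```python
from collections import Counter


def core_words(examples, min_share=0.5):
    """Keep only the words that show up in at least `min_share` of the examples."""
    doc_counts = Counter()
    for text in examples:
        # Use a set so a word counts once per example, however often it repeats.
        doc_counts.update(set(text.lower().split()))
    n = len(examples)
    return sorted(w for w, c in doc_counts.items() if c / n >= min_share)


# Made-up variations on one "template": a greeting.
greetings = [
    "hello how are you",
    "hi how are you today",
    "hey how are things",
    "hello how are you doing",
]

core = core_words(greetings)
print(core)
```

The one-off words ("today", "things", "doing") get culled, and what survives is the skeleton the greetings have in common. Every step is just counting and comparing, which is the point about easy problems done in bulk.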

So this thing is basically a kind of super parrot, capable of remembering and quoting and rephrasing things it has heard said, similar to most human intelligences. I don't think it's a replacement for authors, or creativity, or nuance, or embedding yourself in a platoon before writing a nonfiction book about one, but it's a very good quality parrot.

As to the above examples, I suspect it would have returned something similar to what you said, explaining that there were no centralized factories, or pointing out that information necessary to the story problem was missing. You can fill in the blanks for it and it will proceed to solve the problem. It mainly serves as a way to fetch and organize data that is already available. I'd say the main benefit that ChatGPT offers is the same thing I've been using every other AI for over the past decades, which is just to save time.

I feel like you're picturing some stereotyped dystopia where robots write emotionless books, hollow copies of the soulful poetry written by the legendary scribes of yore, but you don't have anything to worry about, because people won't really buy garbage.